Context prediction


A Framework for Context Prediction

Current mobile devices such as mobile phones and personal digital assistants have become increasingly powerful; they already offer features that only a few users are able to exploit to their full extent. One way to improve usability is to make devices aware of the user’s context, allowing them to adapt to the user instead of forcing the user to adapt to the device. This project takes this approach one step further by not only reacting to the current context but also predicting future context, thus making the devices proactive. Mobile devices are generally well suited for this task because they typically stay close to the user even when not actively in use. This allows them to monitor the user’s context and act accordingly, for example by automatically muting ring or signal tones when the user is in a meeting, or by selecting audio, video, or text communication depending on the user’s current occupation. We use an architecture that allows mobile devices to continuously recognize the current user context and anticipate future user context. The major challenges are that context recognition and prediction should be embedded in mobile devices with limited resources, that learning and adaptation should happen on-line without explicit training phases, and that user intervention should be kept to a minimum through non-obtrusive user interaction.

Project goal

This was a research project on context prediction, i.e. predicting the future context of a user or device. The definition of context used within this project is the one by Dey and Abowd, according to which context is “any information which can be used to characterize the situation of an entity”, where an entity is “a person, place or object that is considered relevant to the interaction between a user and an application, including the user and the application themselves”.

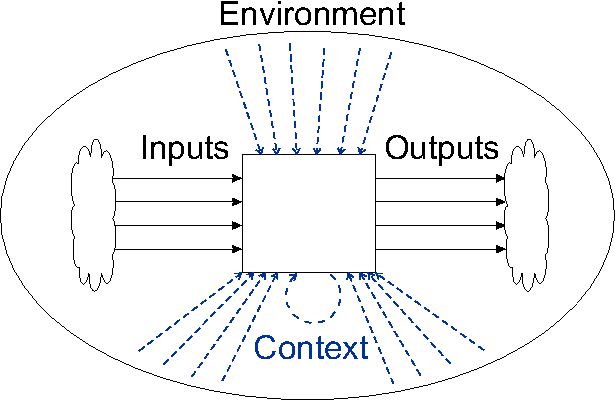

A computer system can be seen as a black box that receives inputs and produces outputs. In this sense, context describes the influence of the whole environment on the system, including the sources of inputs and the destinations of outputs, or, as Lieberman and Selker put it, “Context is everything” but “the explicit input and output”. Context is whatever surrounds a device, so all of its possible aspects cannot be enumerated in the general case. It even includes the internal state of the system, leading to a form of self-awareness.

The specific problem statement, as defined in my PhD thesis, is:

What are the necessary concepts, architectures, and methods for context prediction in embedded systems?

Thus, the research goal is to evaluate and, if necessary, develop methods for recognizing and predicting context with the limited resources of embedded systems. An important aspect is that such a device must be fully operational at all times.

Current status

This project is finished.

Source code and documentation

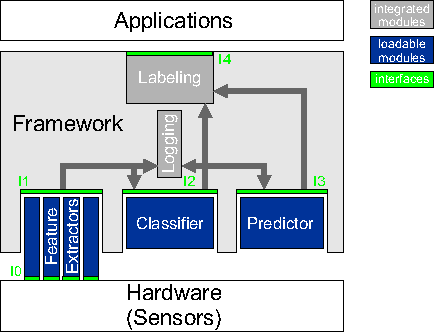

The complete source code of the context prediction framework, together with the Doxygen documentation generated from it in HTML or PDF format, is available under the terms of the GNU GPL. I am also planning to merge my framework with Kristof van Laerhoven’s Common Sense Toolkit.

Approach

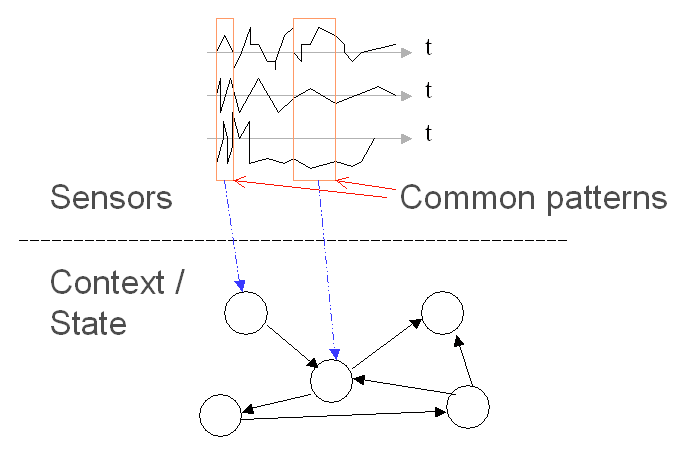

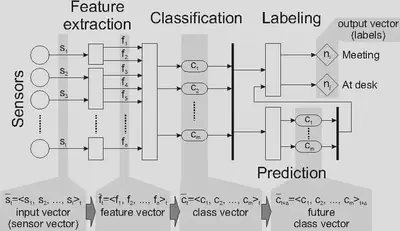

The main idea is to provide software applications not only with information about the current user context, but also with predictions of future user context. Typically, sensor readings show recurring patterns because of the user’s habits. When equipped with various sensors, an information appliance can classify current situations and, based on those classes, learn the user’s behavior and habits by deriving knowledge from historical data. The automatically recognized classes of sensor readings can be regarded as states of an abstract state machine; context changes then correspond to state transitions. Our current research focus is to forecast future user context by extrapolating from the past.
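The state-machine view above can be illustrated with a minimal Python sketch. It assumes that context classes have already been recognized and arrive as plain labels, and it uses a simple first-order Markov model (counting transitions between classes) as a stand-in predictor; this is not necessarily the prediction method used in the framework. The class name `MarkovContextPredictor` and the example labels are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Hashable, Optional


class MarkovContextPredictor:
    """First-order Markov model over recognized context classes (illustrative sketch)."""

    def __init__(self) -> None:
        # transitions[a][b] counts how often context class a was followed by class b
        self.transitions: DefaultDict[Hashable, Counter] = defaultdict(Counter)
        self.current: Optional[Hashable] = None

    def observe(self, context: Hashable) -> None:
        """Feed the latest recognized context class; the model learns on-line."""
        if self.current is not None and context != self.current:
            # Only actual context changes count as state transitions
            self.transitions[self.current][context] += 1
        self.current = context

    def predict(self) -> Optional[Hashable]:
        """Return the most frequent successor of the current context, or None if unknown."""
        successors = self.transitions.get(self.current)
        if not successors:
            return None
        return successors.most_common(1)[0][0]


# Hypothetical sequence of recognized context classes
predictor = MarkovContextPredictor()
for label in ["home", "commute", "office", "commute", "home", "commute", "office", "commute"]:
    predictor.observe(label)
print(predictor.predict())  # prints: office
```

Because the counts are updated with every observed transition, the model needs no separate training phase, which matches the on-line requirement; a real predictor would also have to cope with longer-range dependencies that a first-order model cannot capture.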

Context awareness is currently a highly active research topic, but most publications assume a small number of powerful sensors such as video or infrastructure-based location tracking. The FutureAware project takes a different approach to context detection by using multiple diverse sensors, and extends it to also exploit qualitative, non-numerical features. The variety of sensor types yields a better representation of the user’s context than a single generic sensor.
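To give an idea of how numerical and qualitative features can be compared within one feature vector, the following sketch uses a heterogeneous distance in the spirit of the Heterogeneous Euclidean-Overlap Metric: numerical values contribute their range-normalized difference, nominal values contribute 0 on a match and 1 otherwise. The function `mixed_distance`, the feature layout, and the normalization ranges are assumptions for illustration, not the framework’s actual feature handling.

```python
import math
from typing import Sequence, Union

Value = Union[float, str]


def mixed_distance(a: Sequence[Value], b: Sequence[Value], ranges: Sequence[float]) -> float:
    """Euclidean-style distance over mixed numerical and nominal features.

    Numerical features contribute |x - y| / range; nominal (string) features
    contribute 0 if equal and 1 otherwise. Entries of `ranges` belonging to
    nominal features are ignored.
    """
    total = 0.0
    for x, y, r in zip(a, b, ranges):
        if isinstance(x, str) or isinstance(y, str):
            d = 0.0 if x == y else 1.0  # overlap metric for nominal values
        else:
            d = abs(x - y) / r if r > 0 else 0.0  # range-normalized difference
        total += d * d
    return math.sqrt(total)


# Hypothetical features: normalized microphone loudness, active window, WLAN access point
office = [0.2, "editor", "ap-office"]
meeting = [0.8, "editor", "ap-meeting-room"]
print(mixed_distance(office, meeting, ranges=[1.0, 0.0, 0.0]))
```

Normalizing numerical features by their range keeps a single loud sensor from dominating the nominal ones, so each feature contributes at most 1 to the squared sum.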

The general approach to context prediction taken in this project is to recognize common patterns in sensor time series and to interpret these patterns as the context a user or device is currently in. Because recurring situations produce similar sensor samples each time they are encountered, pattern recognition techniques can be used to recognize these contexts.
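Recognizing recurring situations as classes can be sketched with a very simple on-line clustering scheme (“leader” clustering): each new sample is assigned to the nearest prototype if it lies within a distance threshold, and that prototype is nudged towards the sample; otherwise the sample founds a new class. This is a deliberately simple stand-in, not the clustering algorithm used in the framework, and `threshold` and `learning_rate` are hypothetical parameters. `math.dist` requires Python 3.8 or later.

```python
import math
from typing import List, Sequence


class OnlineLeaderClustering:
    """Incremental clustering: each sample joins its nearest prototype or founds a new class."""

    def __init__(self, threshold: float, learning_rate: float = 0.1) -> None:
        self.threshold = threshold          # maximum distance for joining an existing class
        self.learning_rate = learning_rate  # how far a winning prototype moves per sample
        self.prototypes: List[List[float]] = []

    def classify(self, sample: Sequence[float]) -> int:
        """Return the class index for a sample and adapt the model on-line."""
        if self.prototypes:
            dists = [math.dist(p, sample) for p in self.prototypes]
            best = min(range(len(dists)), key=dists.__getitem__)
            if dists[best] <= self.threshold:
                # Move the winning prototype a little towards the new sample
                proto = self.prototypes[best]
                for i, x in enumerate(sample):
                    proto[i] += self.learning_rate * (x - proto[i])
                return best
        # No prototype is close enough: the sample starts a new context class
        self.prototypes.append(list(sample))
        return len(self.prototypes) - 1


clusterer = OnlineLeaderClustering(threshold=1.0)
samples = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [0.05, 0.1], [5.1, 4.9]]
labels = [clusterer.classify(s) for s in samples]
print(labels)  # prints: [0, 0, 1, 0, 1]
```

The threshold trades off granularity: if it is too small, nearly every sample founds its own class; if it is too large, distinct situations merge. In practice it would have to be chosen relative to the feature scaling.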

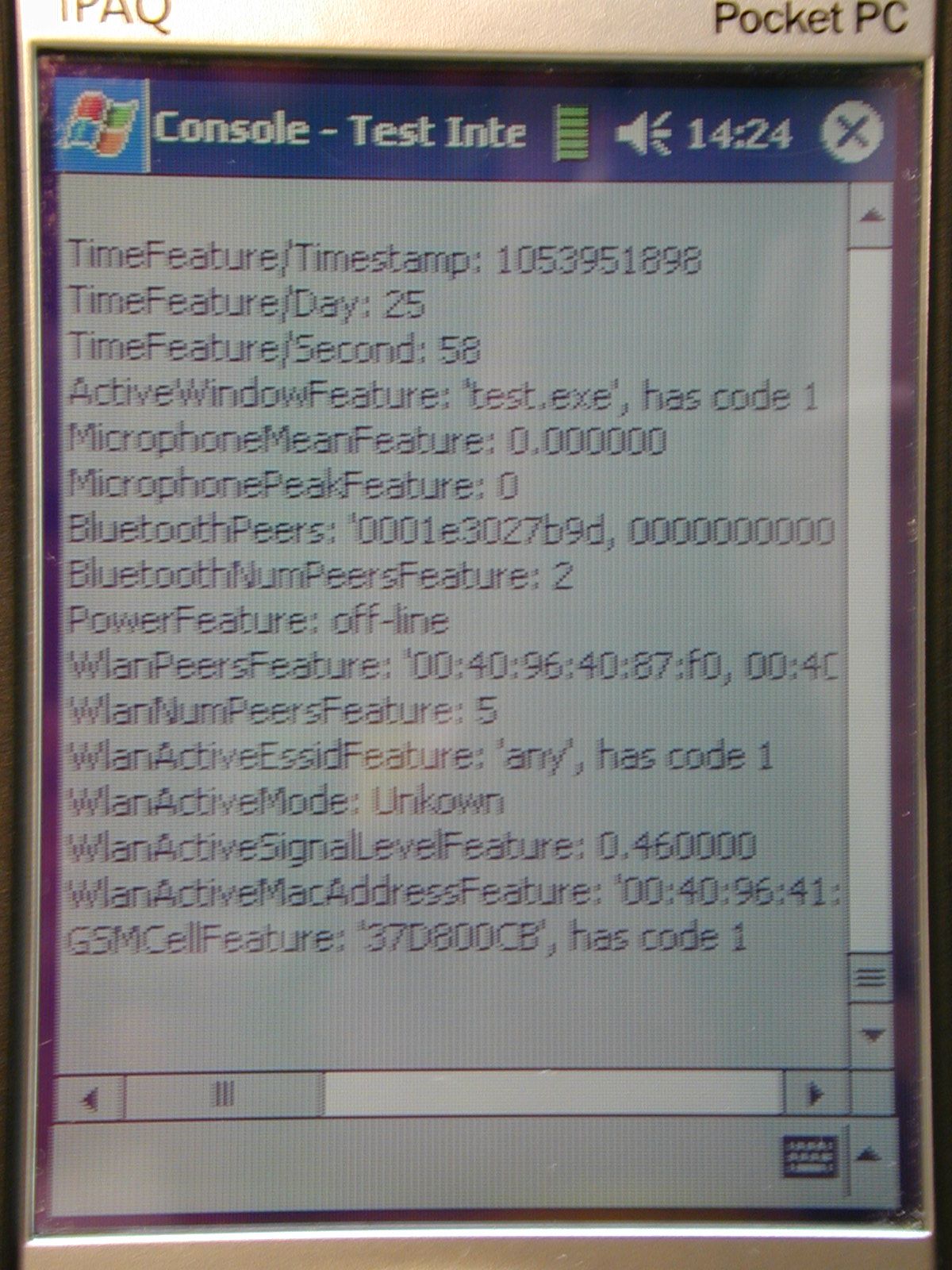

To this end, an architecture for context recognition and prediction has been developed. It is aimed at embedded systems and continuous operation over arbitrary periods of time, so on-line computation in all steps of the architecture is one of its major requirements. The architecture has also been implemented as a cross-platform software framework that runs under Windows 2000/XP, Linux (it should compile on any POSIX system), and Windows CE, and partially under Symbian OS.
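As an example of what on-line computation means in practice, a feature extractor can summarize a sensor stream without storing its history. The sketch below uses Welford’s algorithm to maintain the running mean and variance of one sensor channel with constant memory per update; the class name `RunningStats` is hypothetical, and the framework’s own feature extraction may work differently.

```python
class RunningStats:
    """Welford's on-line algorithm for mean and variance of a single sensor channel."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Incorporate one new reading in O(1) time and memory."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        """Population variance of all readings seen so far (0.0 before any reading)."""
        return self._m2 / self.n if self.n > 0 else 0.0


stats = RunningStats()
for reading in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
    stats.update(reading)
print(stats.mean, stats.variance)
```

Welford’s update is numerically more stable than accumulating a sum and a sum of squares, which matters when a device runs continuously and the number of readings grows without bound.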

First Results

An evaluation on a first real-world data set has been carried out with the version of the framework available from the source code link above. This data set was recorded with a standard laptop over about two months and includes readings from Bluetooth, WLAN, the microphone, the active window, whether the laptop was connected to its charger, and other sensors.

Publications

The following refereed papers, article, book, and PhD thesis have been published within the scope of this project: 1 2 3 4 5 6 7 8 9 10