DNS resolver latency measurements: OPNsense Unbound

Why

Since switching to OPNsense as my main home network firewall/router, I have been having intermittent issues with DNS resolution latency. This has been low priority so far, since the network still works “reasonably well”, but it is annoying when connections occasionally take a few seconds to establish because the DNS lookup stalls before the actual connection is even initiated.

My DNS configuration is complex, partly for historical reasons and partly because I like to play with network protocols (so my home network always tends to be quite a bit more complicated than it strictly needs to be…). Ideally, I want all these aspects covered:

- Resolution of local hostnames managed by DHCP, with:

- both for statically configured hosts (MAC/IP mapping) and for addresses assigned dynamically from a pool

- both for IPv4 and IPv6

- Local overrides of hostnames (to, e.g., redirect LAN clients to the local DMZ for services hosted there instead of going through some NAT reflection constructions) and domains (to, e.g., resolve internal-only domains with local DNS servers or others reachable only through a VPN).

- Network-wide malware-, tracker-, and ad-blocking with DNS filter lists (in addition to e.g. uBlock extensions on desktop/mobile browsers, this tackles devices like TVs and other IoT/smarthome stuff).

- DNS integrity with DNSSec - yes, it’s far from perfect, but it tackles at least some threats.

- (Potentially) DNS privacy against ISP sniffing by using (privacy respecting) upstream DNS resolvers with an encrypted first hop protocol like DNS-over-TLS (DoT), DNS-over-HTTPS (DoH), or DNS-over-QUIC (DoQ). Certificates should be checked, or ISPs can actively MITM the connections.

Unbound itself as well as the OPNsense web interface support all these options, so it is in principle a good match for my needs. Note that I had all this running with a combination of dnsmasq on OpenWRT and a PiHole running on Docker on my homeserver before, and it worked very well without any noticeable latency. So the switch to OPNsense was actually a regression in terms of DNS stability.

Nonetheless, removing one external dependency (the PiHole instance running on another machine) means potentially fewer parts that can go wrong. So let’s try to fix the OPNsense Unbound latency issue.

How

For the following performance measurements, I used dnsperf version 2.9.0 in the Debian package version included in Ubuntu Jammy 22.04.1 LTS. Actually running the resperf-report binary requires installing gnuplot and an outdated libssl_1.1.1f package, though. Dependencies of the dnsperf package should probably be fixed at some point…

As test data, I captured outgoing DNS queries some time last year and tried to produce “representative” traffic from the local network over a short period. Then the query list was extracted with

queryparse -i ~/dnstraffic.pcap -o ~/dnsqueries.list

The resulting list of queries contains 23934 entries with a mix of A, AAAA, PTR, SRV, TXT, and DNSsec query types. Unfortunately, while this localized list of queries is a good starter for dnsperf, it seems that resperf needs a lot more queries. In the end, I combined my localized list with sample data from DNS-OARC:

cat ~/dnsqueries.list queryfile-example-10million-201202_part01 > testqueries.list && wc -l testqueries.list

This yields 1023934 lines.
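To check the mix of record types in such a query file, a few lines of Python are enough. This is only a minimal sketch: it assumes the usual dnsperf input format of one `name TYPE` pair per line with `;` starting a comment line, and the sample lines are made up for illustration (they are not taken from the captured traffic).

```python
from collections import Counter


def count_query_types(lines):
    """Count DNS record types in dnsperf-style query lines ("name TYPE")."""
    counts = Counter()
    for line in lines:
        fields = line.split()
        # Assumption: blank lines and lines starting with ';' carry no query.
        if len(fields) < 2 or fields[0].startswith(";"):
            continue
        counts[fields[1].upper()] += 1
    return counts


# Made-up sample lines, only to show the expected input format.
sample = [
    "example.com A",
    "example.com AAAA",
    "1.0.168.192.in-addr.arpa PTR",
    "; a comment line",
    "example.org a",
]
print(count_query_types(sample))
```

For a real file, pass the open file object instead of the list, e.g. `count_query_types(open("testqueries.list"))`.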

Measurements were taken with my 23934 + 1 million queries from the first sample file as input and a less aggressive query rate, as described in the results section below:

resperf-report -s <local firewall IP address> -d testqueries.list -L 10 -q 4000 -t 120
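When repeating runs with different parameters, it can help to assemble the command line programmatically. The following is a sketch that only builds the argument list with the flags shown above; the function name, its parameter names, and the placeholder address `192.0.2.1` are hypothetical and not part of dnsperf.

```python
def build_resperf_cmd(server, datafile, max_loss_pct=10, max_outstanding=4000, t_value=120):
    """Assemble the resperf-report argument list with the flags used in the text."""
    return [
        "resperf-report",
        "-s", server,                 # resolver under test
        "-d", datafile,               # query list file
        "-L", str(max_loss_pct),      # maximum query loss in percent
        "-q", str(max_outstanding),   # maximum outstanding queries
        "-t", str(t_value),           # value passed to -t, as in the command above
    ]


# 192.0.2.1 is a documentation-only placeholder, not the real firewall address.
cmd = build_resperf_cmd("192.0.2.1", "testqueries.list")
print(" ".join(cmd))
```

On a machine with dnsperf installed, the list can be handed to `subprocess.run(cmd, check=True)`.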

Before every run, unbound on OPNsense (running on an APU4d4) was restarted to clear in-memory caches.

CrowdSec, Suricata, and other CPU-intensive services applied to all traffic are not active, but an OpenVPN server and a Wireguard tunnel are. DNS traffic should however not go through them.

The client executing resperf was a Linux desktop connected to the firewall via 1 Gbit/s Ethernet, with an average ping latency of less than 0.5ms.

CPU usage on the firewall is about 1% when idle. While running the (baseline, without blocklists) DNS resolver tests, it initially jumps to 100% (unbound process) and afterwards only occasionally peaks at around 30%.

Configuration variants: Basics

All measured unbound configuration variants had these basic options set in the OPNsense admin interface:

- General:

- “Enable Unbound” (Duh…)

- “Network Interfaces”: “All (recommended)”

- unchecked “Enable DNS64 Support”

- “Register DHCP leases”

- “Register DHCP static mappings”

- “Register IPv6 link-local addresses”

- unchecked “Do not register system A/AAAA records”

- “Create corresponding TXT records”

- unchecked “Flush DNS cache during reload”

- Overrides: configured 2 host overrides, 2 aliases, and 7 active domain overrides

- Advanced:

- “Prefetch Support”

- “Prefetch DNS Key Support”

- unchecked “Harden DNSSEC Data”

- unchecked “Serve Expired Responses”

- unchecked “Strict QNAME Minimisation”

- unchecked “Extended Statistics” (Note: This was on for the first measurements, but I turned it off for the updated baseline and all other variants.)

- unchecked “Log Queries”

- unchecked “Log Replies”

- unchecked “Tag Queries and Replies”

- “Log Level Verbosity”: “Level 1 (Default)”

- all the rest left empty/default

Configuration variant 1: no DNSsec, blocklist disabled, no DoT upstream resolvers

All other advanced options are disabled for this one. I call it baseline and it should be as fast as reasonably possible, as I would not expect a few local entries/overrides to have a measurable impact. In addition to the overrides specified in the unbound configuration, the hostnames taken from the DHCP config in /var/unbound/host_entries.conf amount to only 182 lines, so that really shouldn’t have an impact (if it does, then unbound is horribly inefficient).

Configuration variant 2: no DNSsec, blocklist disabled, Quad9 as DoT upstream resolver

Enabled the following DoT servers:

- DNS over TLS:

- address 9.9.9.9, port 853, hostname dns.quad9.net

- address 2620:fe::fe, port 853, hostname dns.quad9.net

- address 149.112.112.112, port 853, hostname dns.quad9.net

- address 2620:fe::9, port 853, hostname dns.quad9.net

Why exactly Quad9? Of all the major ones, I like their privacy policy best, and they are comparably fast to the others from this vantage point.
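For readers without the OPNsense web interface, the four DoT entries above roughly correspond to a forward-zone stanza in plain unbound.conf. The sketch below renders such a stanza; the helper name is hypothetical, and the file that OPNsense actually generates may look different.

```python
def render_dot_forward_zone(upstreams):
    """Render an unbound forward-zone stanza that forwards all queries via DoT.

    upstreams: iterable of (ip_address, port, tls_hostname) tuples.
    """
    lines = [
        "forward-zone:",
        '  name: "."',
        "  forward-tls-upstream: yes",
    ]
    for address, port, hostname in upstreams:
        # unbound syntax: <ip>@<port>#<name checked against the TLS certificate>
        lines.append(f"  forward-addr: {address}@{port}#{hostname}")
    return "\n".join(lines)


# The four Quad9 entries listed above.
QUAD9 = [
    ("9.9.9.9", 853, "dns.quad9.net"),
    ("2620:fe::fe", 853, "dns.quad9.net"),
    ("149.112.112.112", 853, "dns.quad9.net"),
    ("2620:fe::9", 853, "dns.quad9.net"),
]
print(render_dot_forward_zone(QUAD9))
```

Note that in a hand-written unbound.conf the certificate check only works if a CA bundle is configured as well (the `tls-cert-bundle` option in the `server:` section).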

Configuration variant 3: DNSsec validation enabled, blocklist disabled, Quad9 as DoT upstream resolver

In addition to configured upstream DoT servers from variant 2, enabled:

- General:

- “Enable DNSSEC Support”

Configuration variants 4a-d: DNSsec validation enabled, different blocklists enabled, Quad9 as DoT upstream resolver

In addition to all of the above, now with enabling blocklists:

- Blocklist:

- “Enable”

- “Type of DNSBL”:

- Variant 4a “avoid the baddies”: Abuse.ch + Blocklist.site Phishing –> 201188 entries on blocklist

- Variant 4b “also avoid the tracking”: 4a + Blocklist.site Tracking + Blocklist.site Ransomware + EasyPrivacy + Simple Tracker List –> added 35482 entries to blocklist

- Variant 4c “and I don’t like ads either”: 4b + Blocklist.site Ads + Simple Ad List –> added 148050 entries to blocklist

- Variant 4d “all in”: 4c + AdAway List + AdGuard List –> added 32566 entries to blocklist with 417286 total entries (and 343M SIZE / 235M RES instead of 147M / 60M without any blocklists)
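The per-variant numbers above are increments, so the blocklist size for each variant is the running sum. A short sketch to compute the cumulative totals from the figures listed above:

```python
from itertools import accumulate

# Entries added by each variant, as listed above.
added = {"4a": 201188, "4b": 35482, "4c": 148050, "4d": 32566}

# Running sum: total blocklist size once each variant is enabled.
totals = dict(zip(added, accumulate(added.values())))

for variant, total in totals.items():
    print(f"{variant}: {total} entries")
```

The final value for 4d matches the 417286 total entries reported above.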

Results

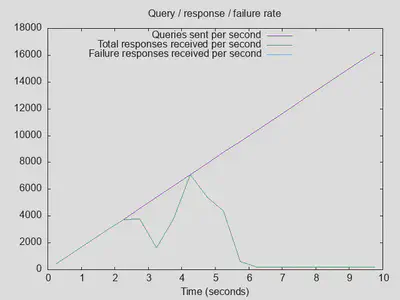

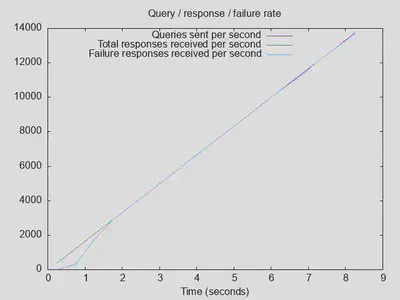

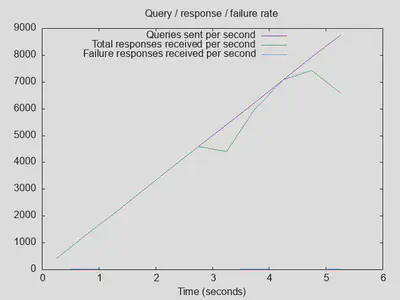

The first baseline run with resperf default parameters (no slowdown set) was … not good. After about 5 seconds, queries were basically dropped with latency spiking to 4 seconds and responses going towards zero:

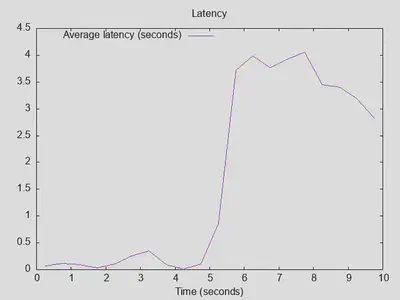

As a first try for optimization, I disabled “Extended Statistics” under Advanced options. This brought latency down to about 2 seconds, increased maximum throughput by about 10%, and increased the number of completed queries by about 20%. Better, but the drop after 5 seconds is still there:

So, first lesson learned: “Extended Statistics” is best kept off.

Next optimization options are the number of queries per thread - which is a hard limit on parallel queries with 4 threads as used on the APU4d4 - and the message cache. Increasing the number of queries to 8192 (double the default 4096) and correspondingly doubling the outgoing range to 16384 brought no significant change, so I reset them back to the defaults of 4096 and 8192, respectively. Increasing message cache size to 64m and RRset cache size to 128m brought a slower ramp-up to 100% CPU usage of the unbound process together with a later ramp-down back to around 0% while resperf was still running. Again, no significant change, and I reset the values back to my previous 16m message and 64m RRset cache size.

Even reducing “Jostle Timeout” from the default of 200ms to 50ms brought no real change, though I expected it to relieve the open connections pressure somewhat.
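For reference, the tuning knobs tried above correspond (as far as the option names go) to settings in the `server:` section of unbound.conf. The sketch below renders them with the experimental values from the text; the mapping from OPNsense GUI fields to these option names is an assumption, the helper is hypothetical, and in the text the changes were tried one after another rather than all at once.

```python
def render_server_options(options):
    """Render a dict of unbound.conf server options as a 'server:' stanza."""
    lines = ["server:"]
    for name, value in options.items():
        lines.append(f"  {name}: {value}")
    return "\n".join(lines)


# Experimental values from the text (not the defaults that were restored later).
tuned = {
    "num-threads": 4,
    "num-queries-per-thread": 8192,
    "outgoing-range": 16384,
    "msg-cache-size": "64m",
    "rrset-cache-size": "128m",
    "jostle-timeout": 50,  # milliseconds
}
print(render_server_options(tuned))
```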

To rule out CPU issues, unbound with the same configuration was also run on a Proxmox VM as explained in more detail in this post, with no significantly different results.

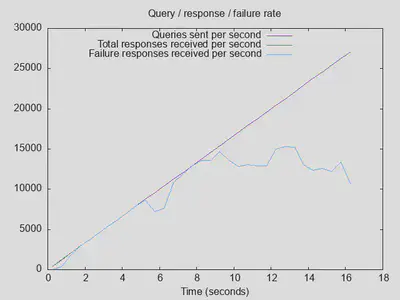

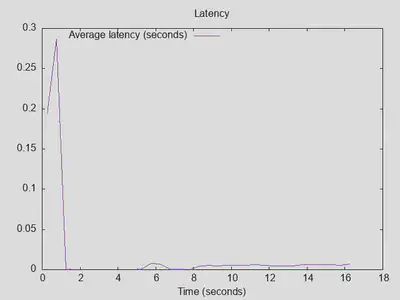

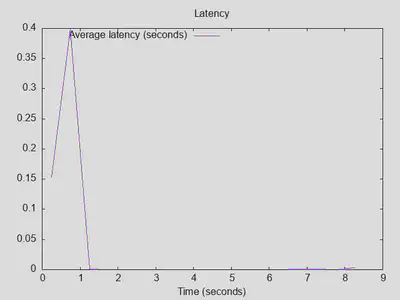

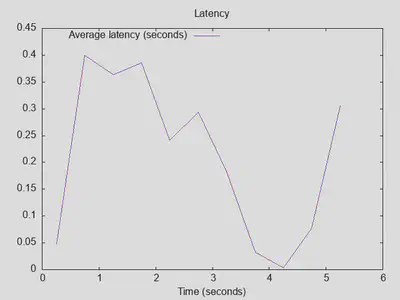

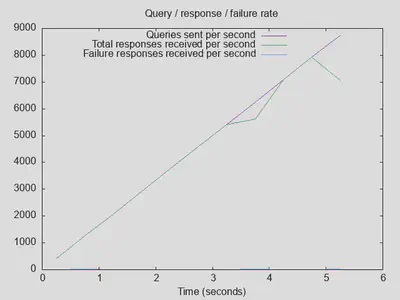

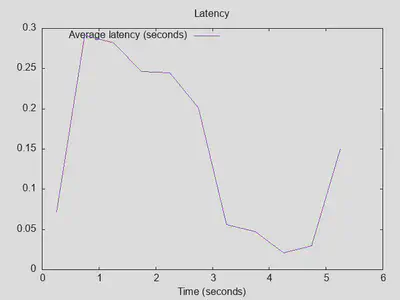

Just for comparison, I also ran the same test on PiHole running in a Docker container on the same Proxmox host. The results (not a completely fair comparison, as PiHole is already using fast upstream DoT resolvers, but is applying all its blocklists) are much better, with over 160000 instead of 25000 queries actually completed, a throughput of over 15000 instead of about 7500 queries per second, and latency never above 0.3s:

However, PiHole returns a REFUSED error code for nearly 99% of all queries, so there might be some explicit rate limiting going on here. That is, PiHole returns results very quickly, but maybe not correct ones! unbound returned about 55% NXDOMAIN error codes with these settings, and in absolute numbers significantly more NOERROR results (over 11000) compared to PiHole (499).

Lesson two: PiHole with dnsmasq with blocklists enabled and a DoT upstream is about twice as fast as unbound without blocklists when loaded with a large number of parallel/outstanding queries, but it might be failing most of these queries

Variant 1: baseline

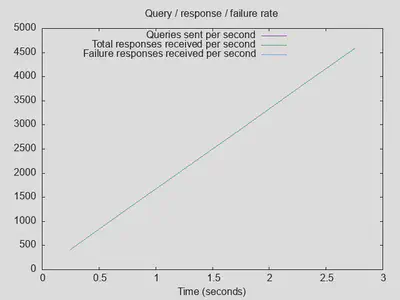

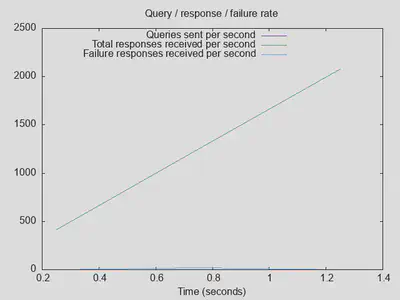

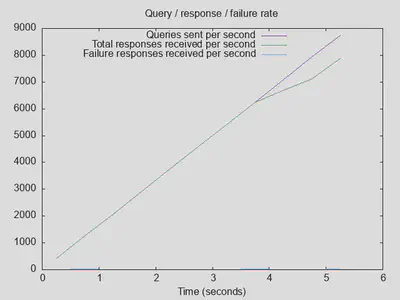

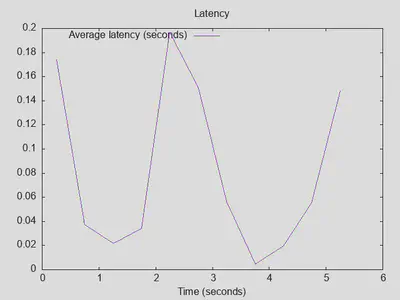

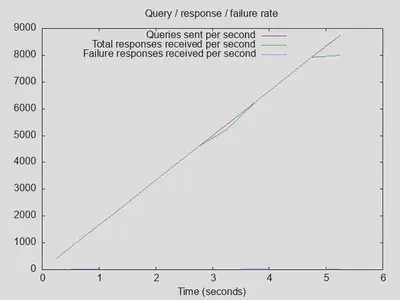

However, this is a pretty aggressive test and not necessarily representative of a small home network. Therefore, I slowed down resperf to slightly more moderate query speeds with -L 10 -q 1000 -t 120 (maximum query loss 10%, maximum 1000 outstanding queries, but run for up to 120 seconds after ramp-up so that more queries can be completed at that rate).

Note that over 10000 outstanding queries should be possible with unbound configured for 4096 open queries per thread on each of the 4 threads. Much more than that necessarily means losing parallel open queries due to the unbound thread pool design. However, I also tried with 5000 and 10000 outstanding queries, and saw a drop at the end of the line and some (less than 0.1%, but still) SERVFAIL responses. So, to get a completely stable baseline with 0% SERVFAIL and no drop in the rate of responses received, I kept measuring with up to 1000 outstanding queries at that point. As mentioned, I don’t think such a high load is representative of typical small to medium sized networks, so I am ok with these test parameters going forward for debugging and optimizing performance issues. The new baseline at this point (on the APU4d4, after an unbound restart for cache reset) was:

To double check, running the same test on the OPNsense Proxmox VM yielded no significant differences (slightly more completed queries, maximum latency actually a bit higher, but that probably falls under measurement noise).
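The remark above that more than 10000 outstanding queries should be possible follows from simple arithmetic. A sketch, under the simplifying assumption that queries are spread evenly across all threads:

```python
def max_parallel_queries(num_threads, queries_per_thread):
    """Upper bound on open queries, assuming an even spread across threads."""
    return num_threads * queries_per_thread


# 4 threads with 4096 queries per thread, as configured on the APU4d4.
capacity = max_parallel_queries(4, 4096)
print(capacity)

# The resperf -q values tried in the text, compared against that bound.
for outstanding in (1000, 5000, 10000):
    print(outstanding, outstanding <= capacity)
```

All three tested values stay below this theoretical bound, so the SERVFAIL responses seen at 5000 and 10000 outstanding queries are not explained by the per-thread limit alone.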

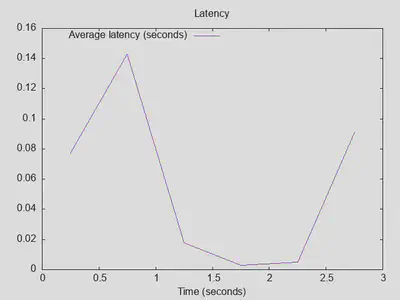

Again, comparing these test parameters on PiHole brings increased throughput and number of queries completed with an incredibly stable latency (however, absolute latency is still very low on both with a maximum of around 100ms after initial ramp-up):

The same issue with returned error codes remains: unbound returned either successful NOERROR (35%) or unsuccessful NXDOMAIN (65%) replies, while PiHole returned mostly REFUSED replies (nearly 99%). unbound managed to answer nearly 3000 queries with NOERROR, PiHole only 522.

Lesson three: PiHole is still faster at returning (refused) replies, but with moderate query speeds, both unbound and PiHole can provide really good latencies of around 100ms for uncached queries

Variant 2: DoT upstream resolvers

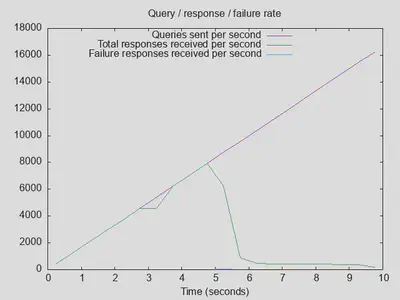

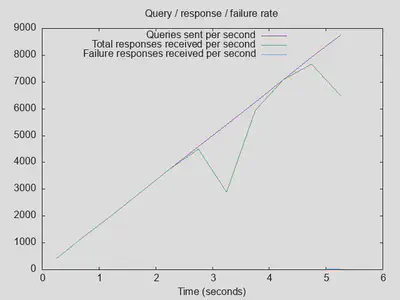

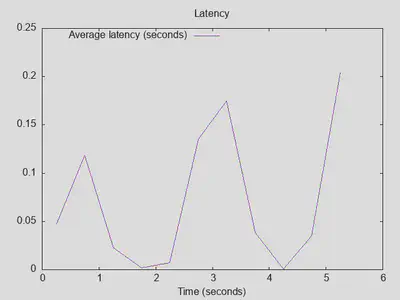

Very unexpected results with Quad9 as upstream DoT resolver, reaching 1000 outstanding queries after 3 seconds with only about a third of the number of completed queries and about triple latency:

Running resperf with -q 4000, i.e. up to 4000 instead of 1000 outstanding queries, brought results back to normal:

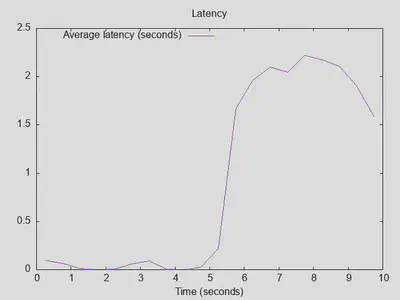

Comparing it to the baseline setup without DoT upstream servers reveals that having a fast, stable upstream resolver actually improves matters (25000 completed queries with DoT vs. 22500 without, throughput improved by about 50%, and latency with DoT is comparable):

Lesson four: DoT with a fast upstream can be beneficial, and it doesn’t affect latency much

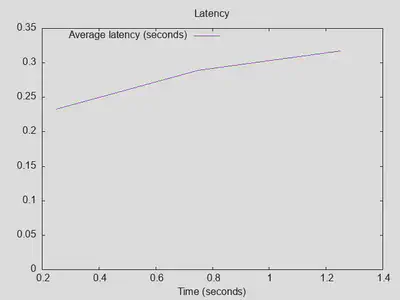

Variant 3: DoT + DNSsec validation

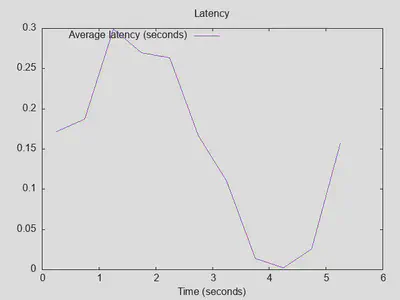

DNSsec validation doesn’t significantly influence throughput or number of replies (bringing it to roughly the level of the baseline without DoT), but latency roughly doubles:

Lesson five: DNSsec validation adds about 100-200ms latency, which may or may not be noticeable

Variant 4: DoT + DNSsec + various blocklists

Surprisingly, adding some blocklist filtering within unbound does not show any significant changes, and actually seems to slightly increase throughput and lower maximum latency - both probably within the bounds of measurement noise (4a):

Variants 4b and 4c did not change results in any significant way, besides showing a slight increase in the initial latency (but not really in sustained average latency, so considering measurement noise again). With 4d, throughput decreased again slightly, but the overall difference between 4a and 4d is not large:

Bonus variant: DoT + all-in blocklists, but disabled DNSsec validation

As one of the few potentially significant changes causing an increase in latency was enabling DNSsec validation in unbound, I also tried variant 4d but with just DNSsec support disabled. While this slightly improved the total number of completed queries, latency did not actually improve. This indicates that unbound potentially parallelizes DNSsec validation and blocklist filtering, but I have not actually checked if this is what happens. The lesson learned here is that DNSsec validation is nearly for free (in terms of latency) when blocklists are in use. Only when both were off did I measure a significant decrease in latency.

Conclusions

OPNsense with its embedded unbound can actually perform well as a DNS resolver under controlled conditions, and surprisingly adding a decent set of blocklists doesn’t noticeably increase latency. DoT upstream resolvers, if fast and stable, are not a problem and can actually increase throughput a bit (which is not surprising, assuming that they have massive caches). If the upstream DoT resolver already performs DNSsec validation (and you trust it to do so), then disabling local DNSsec validation in unbound would not change the overall security level. On the other hand, local DNSsec validation can be an additional source of failures if, e.g., local keys are not up to date or other subtle problems occur.

Given these results, I will actually leave DoT and the blocklists mentioned above as well as DNSsec validation enabled for now. During the controlled resperf measurement, unbound performed pretty well with the settings documented above (e.g., I learned to disable extended statistics).

Nonetheless, I will keep watching it carefully, as I have had problems with unbound becoming worse both in latency and reply failure rates over time. Potentially there are some unresolved memory/cache/disk storage leaks or other issues that accumulate only slowly. If it keeps bugging me, I might just add a cronjob to restart unbound every night and see if that solves all remaining stability issues.